It has been a few weeks since Blast, the much-anticipated "L2" (it really isn't one) with native yield, announced its launch, aiming to attract liquidity (now exceeding $850 million; the team knows how to shoot) to what currently operates as a multisig. While this event sparked valid criticism, I tend to look for the positive side of things. By "positive," I mean less the situation itself and more what can be learned from it. There's a lot to unravel from this event, so let's dig in.

George Santayana, in The Life of Reason: The Phases of Human Progress, wrote the adage often misattributed to Churchill: "Those who cannot remember the past are condemned to repeat it." Although the five-volume work proved daunting (I must confess, I faltered after the second volume), its first volume, which contains this quote, highlights the significance of historical wisdom. The idea lingered, seemingly abstract, until now, when I finally grasped what Santayana meant.

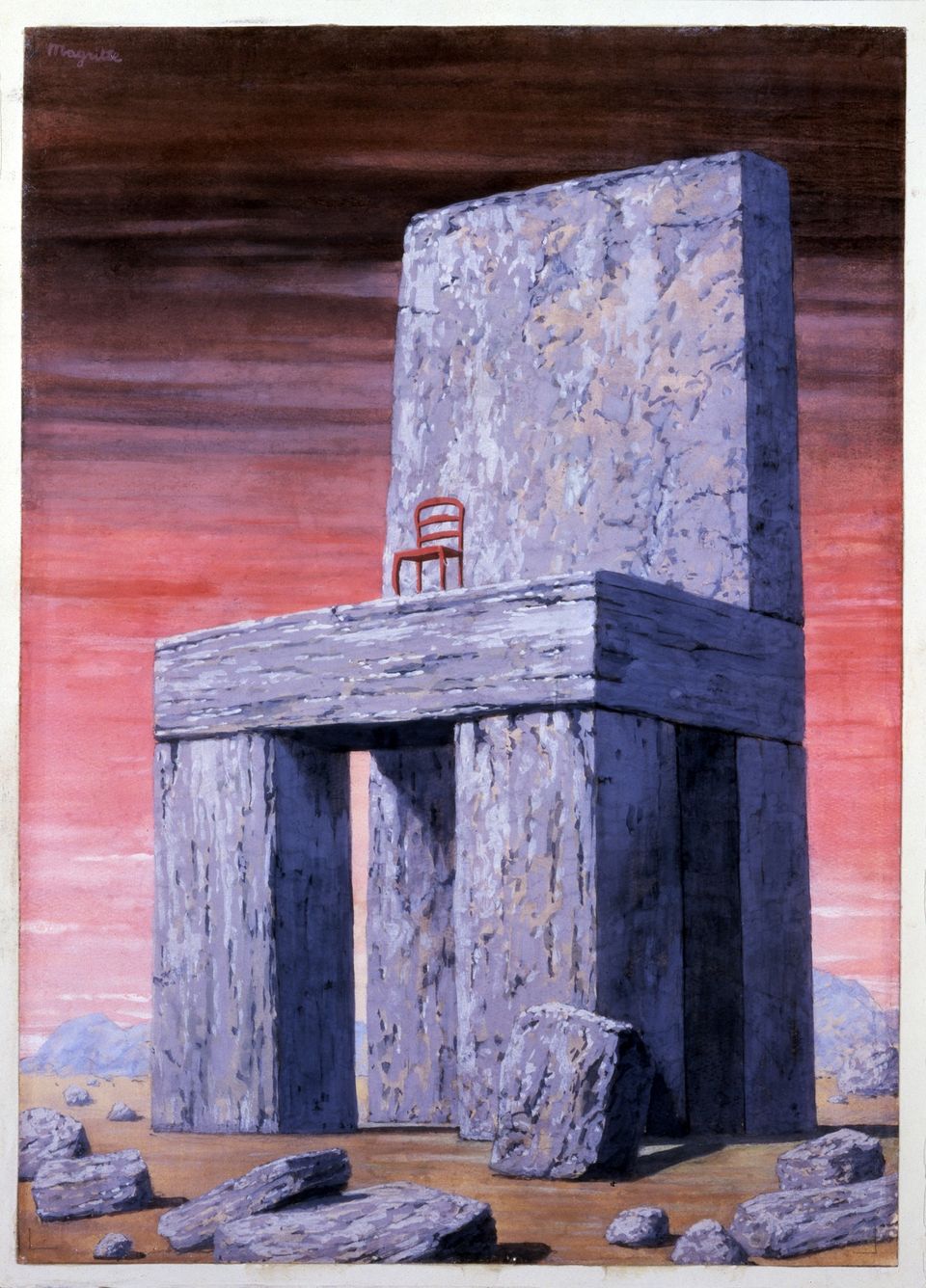

René Magritte, "Those who cannot remember the past are condemned to repeat it."--George Santayana, The Life of Reason, 1905. From the series Great Ideas of Western Man, ca. 1962.

A quick look back

Human organization's roots trace back to the shift from a nomadic, hunting lifestyle to settled agriculture. This change allowed surplus food production, paving the way for specialization beyond farming. These two aspects—division of labor and individual ownership of work outcomes—form the bedrock of structured exchange. I craft swords, needing sustenance; you cultivate cereals, needing defense—we've established direct exchange. Yet, sometimes, direct exchange isn't viable, leading to the introduction of indirect exchange. If I possess a sword but wish to purchase cereals at any time, I'd seek assurance that I can do so regardless of the season. Swords hold value mainly in times of war; hence, to ensure acquiring cereals consistently, I'd exchange them for something exchangeable later. But what could that be? Food perishes, and rocks are easily replicated. Gold, silver, and copper emerge as viable options—ubiquitous, non-perishable, and challenging to fabricate. Voilà! Have we birthed the concept of money? Yes, when these materials become universally accepted for trade, they form commodity money.

Now, let's delve deeper. Collective consensus (albeit unconscious) on a particular commodity's suitability as a medium of exchange prompts standardization of its form and size, facilitating transactions. Standardization enables swift recognition of a commodity's weight, quality, and thus, its value—simplifying exchanges. Consequently, entities begin producing standardized pieces of gold or silver, adorned with stamps for easy valuation, and thus easy exchanges.

We now have commodity money standardized by a trusted entity (often the governing body), guaranteeing each piece's weight while pegging its value directly to the weight and quality of the underlying commodity it's made of. Any change in the piece's weight or quality by the trusted entity alters its value because the value of the money is derived from the commodity (despite attempts to impose consistent values on coins with lesser precious metal). However, transport issues arise as gold is challenging to move. A consensus emerges: I offer you a claim to a set amount of gold, a paper-based claim obviating transport issues, ensuring gold claimability anytime. Possessing this claim, acquiring actual gold entails a loss due to transport and security costs. Thus, holders begin using this claim to procure other goods. Gradually, this practice proliferates, as it's the most logical course of action. This marks the advent of credit money. And with credit money, banking surfaces—someone must physically safeguard the gold.

Credit money enhanced efficiency but blurred the distinction between money and its underlying commodity. In the course of history, maintaining money backed by gold (the gold standard) became a symbol of prestige for the issuer. However, realizing that maintaining the gold standard equated to controlling the money supply, issuers assumed control over issuance—this is the final transition to fiat money, our modern currency. Worth noting—every transition between money types is prompted by marginal efficiency improvements for users: from no money to commodity money, enabling indirect exchanges; from commodity money to credit money, easing transactions through enhanced transport; from credit to fiat money, engineered inflation by an entity. You might wonder how inflation is an improvement—it's not. Governments created this semblance of improvement by taxing non-fiat money use, sealing the deal.

We've barely touched on banks, which emerged with credit money. The history of banks is closely intertwined with the evolution of money, and banks were often government-owned. A detailed history of banking could take considerable time, so let's make it quick. Banks' initial purpose was safeguarding gold and ensuring a 1:1 backing for issued claims. As governments took control over money creation and discarded the gold standard, banks began backing claims with alternative collateral, often government bonds. At this juncture, holding a bill meant holding a claim on a claim, ultimately collateralized by an unspecified amount of gold and some collective trust in the system. This paved the way for fractional reserve banking: holding a claim on bank money backed by varying assets that are put to work to yield profits (in a regulated framework, right?).

And that's pretty much it: we've now arrived at the 21st century, with modern banking and money.

A quick look back ... into crypto

Transitioning to digital money and crypto, everything began with Bitcoin in 2009. In 2023, Bitcoin effectively functions as our digital commodity money. It embodies the properties of scarcity, durability, transportability, divisibility, and holds intrinsic value through the stored energy it represents. However, the essence of money lies not solely in its properties but in widespread societal acceptance. While it's early to categorically term Bitcoin as commodity money, it's accepted as such in the digital realm. One could even argue that it surpasses gold as commodity money, given its ease of transport and known scarcity. However, for our reflection, this argument holds little relevance. Let's progress to Ethereum. Ethereum functions as a programmable shared-state machine. Its significance for us here lies not predominantly in its asset ETH, but in its ability to enable transparent, programmable value flows through smart contracts. Over the years, developers have leveraged this technology to transparently rebuild existing real-world markets using our new commodity money(s).

The growing use of these new commodity assets and their underlying networks has made transactions more expensive through higher gas prices. Consequently, Layer 2 solutions were created. In a very simplified model, an L2 consists of two elements: a smart contract holding bridged assets and another preserving its state. While this simplification overlooks intricate mechanics like asset bridging methods or state transition mechanisms, it underscores the transformative nature of L2s: they offer a marginal improvement in exchange by vastly reducing costs. It also means trading claims on assets rather than the assets themselves. Familiar territory, isn't it?
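To make the simplified two-contract model concrete, here is a minimal Python sketch. Every name in it (`L1Bridge`, `L1StateContract`, `L2Ledger`) is hypothetical and chosen for illustration; real rollups add proofs, challenge periods, and sequencing that are deliberately left out.

```python
from dataclasses import dataclass, field
from hashlib import sha256
from typing import Dict, List


@dataclass
class L1Bridge:
    """Hypothetical L1 contract that custodies the bridged assets."""
    locked: int = 0  # total amount held on L1

    def deposit(self, amount: int) -> int:
        if amount <= 0:
            raise ValueError("deposit must be positive")
        self.locked += amount
        return amount  # number of claims to mint on L2

    def release(self, amount: int) -> None:
        if amount > self.locked:
            raise ValueError("not enough assets locked on L1")
        self.locked -= amount


@dataclass
class L1StateContract:
    """Hypothetical L1 contract that records commitments to the L2 state."""
    roots: List[str] = field(default_factory=list)

    def post_root(self, root: str) -> None:
        self.roots.append(root)


@dataclass
class L2Ledger:
    """L2 balances: claims on the assets locked in L1Bridge."""
    balances: Dict[str, int] = field(default_factory=dict)

    def mint(self, user: str, amount: int) -> None:
        self.balances[user] = self.balances.get(user, 0) + amount

    def transfer(self, sender: str, recipient: str, amount: int) -> None:
        if amount <= 0 or self.balances.get(sender, 0) < amount:
            raise ValueError("invalid transfer")
        self.balances[sender] -= amount
        self.balances[recipient] = self.balances.get(recipient, 0) + amount

    def state_root(self) -> str:
        # Toy commitment: a hash of the sorted balances, not a Merkle root.
        encoded = repr(sorted(self.balances.items())).encode()
        return sha256(encoded).hexdigest()

    def total_claims(self) -> int:
        return sum(self.balances.values())
```

In this sketch, a transfer on L2 changes who holds the claims but never touches `L1Bridge.locked`: what moves on L2 is the claim, not the asset.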

A quick look forward

Now, the pressing question: will we come full circle, and what lies ahead? First, we must recognize the catalyst that invariably propels markets from one phase to another: a marginal improvement in exchange efficiency. This covers several aspects: faster and cheaper transactions, more efficient liquidity, and broader accessibility. With this understanding, it's only a matter of time before L2s become the primary exchange platforms. As we have seen, these new technical assumptions come with an economic transformation: the assets exchanged in the crypto realm evolve from commodities to credit assets. Consequently, crypto will shift from using commodity money to credit money. This shift means L2s turning into banks, with your claim on an L2 representing the asset on L1, and the gap between the ETH held on L1 and the L2 claim widening.

That being said, it's time for you to choose your preferred path, dear anon:

Path one

One L2 starts luring liquidity with the promise that assets on the platform will have yield-bearing backing, calling this mechanism "native yield" (when in reality it is level 0 of fractional reserve banking). Even though it's just a multisig, users deposit nearly a billion dollars. This gives other teams ideas: you could pull in millions in TVL by creating an L2 (you don't even need to build it; Conduit will handle it for you) and offering some APR, taking a juicy cut of it. But a 4% APR won't cut it, so another team offers 15% with no risk. After all, what could possibly go wrong? (AL2meda might be a good name for this project.) Next thing you know, another team launches an L2 with a whopping 30% native yield. Seems fantastic, right? We're in the midst of a bull market, everything looks stellar, and returns are incredible. But remember, in this world, what goes up eventually comes down. Those 15% or 30% returns only exist by taking directional risk, so a single downturn will wipe out those L2s, and then the regulators step in. Regulators don't need numerous failures to justify the regulations they want. Just a few signals pointing in their direction would suffice. Check out their stance on wildcat banks. Plus, L2s aren't decentralized (yet), and probably won't be as decentralized as Bitcoin or Ethereum. Looks like we're back to square one, with regulators ensuring that L2s follow the rules they set, which could go as far as taxing non-CBDC transfers on the network, censoring users, and who knows what else. They have done it before.

Path two

Two fundamental truths emerge: placing money in a few hands tends to end poorly, and when money is involved, principles often take a back seat. Let's be clear: crypto has shown that financial rationality governs much of our behavior, including our principles. To all the Lido critics and Ethereum aligners: behavior can only be aligned through cryptoeconomic alignment. So, to ensure alignment with a vision, it's crucial to offer incentives stronger than those behind alternative behaviors. Trusting a group based on its ethos doesn't guarantee consistent behavior, because motivations, ideas, and individuals evolve, and morality is adaptable. Now, the question arises: how can we ensure most exchanges occur on L2s with reasonable risk management, given that offering yield becomes a competitive advantage?

Trying my luck

What I'm about to discuss here represents solely my viewpoint, which remains subject to change and evolution. Nevertheless, I'll endeavor to express strong opinions, recalling a saying attributed to a former French president: "le pire risque c'est celui de ne pas en prendre," translating to "the worst risk is not taking any" (though I'd advise against employing this in crypto debates).

In my perspective, the optimal framework involves enabling "native yield" (again, it's nothing more than transparent fractional reserve banking) for those willing to assume additional risks while safeguarding those happy with holding the claim of an asset (100% backed by the asset on L1). This implies the need for distinct smart contracts on L1 based on the user's chosen mode while bridging – one for original asset holding (safe mode) and another facilitating fund allocation elsewhere (yield mode), thereby introducing additional smart contract risks. To be perfectly clear, the assets we're discussing here are short-tail assets, ETH, BTC and USDC/T, as those are the ones used as money on blockchains. From here, determining how funds are allocated from the yield mode presents several design possibilities.
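The two bridging modes can be sketched as follows. This is an illustration under stated assumptions, not a contract design: `DualModeBridge`, `Mode`, and every method name are hypothetical, and the two L1 contracts are collapsed into one Python object for brevity.

```python
from dataclasses import dataclass, field
from enum import Enum
from typing import Dict, Tuple


class Mode(Enum):
    SAFE = "safe"    # claim is 100% backed by the asset held on L1
    YIELD = "yield"  # backing may be allocated elsewhere to earn yield


@dataclass
class DualModeBridge:
    """Hypothetical bridge keeping safe-mode and yield-mode funds apart."""
    safe_vault: int = 0       # assets held untouched for safe-mode claims
    yield_idle: int = 0       # yield-mode assets still sitting on L1
    yield_deployed: int = 0   # yield-mode assets allocated elsewhere
    claims: Dict[Tuple[str, Mode], int] = field(default_factory=dict)

    def deposit(self, user: str, amount: int, mode: Mode) -> None:
        if amount <= 0:
            raise ValueError("deposit must be positive")
        if mode is Mode.SAFE:
            self.safe_vault += amount
        else:
            self.yield_idle += amount
        key = (user, mode)
        self.claims[key] = self.claims.get(key, 0) + amount

    def allocate(self, amount: int) -> None:
        """Move yield-mode funds out of the bridge; safe funds are never used."""
        if amount <= 0 or amount > self.yield_idle:
            raise ValueError("invalid allocation")
        self.yield_idle -= amount
        self.yield_deployed += amount

    def total_claims(self, mode: Mode) -> int:
        return sum(v for (_, m), v in self.claims.items() if m is mode)

    def liquid_backing(self, mode: Mode) -> float:
        """Share of outstanding claims redeemable right now from L1 holdings."""
        claims = self.total_claims(mode)
        if claims == 0:
            return 1.0
        held = self.safe_vault if mode is Mode.SAFE else self.yield_idle
        return held / claims
```

With 100 units bridged in each mode and 80 of the yield-mode funds allocated elsewhere, `liquid_backing(Mode.SAFE)` stays at 1.0 while `liquid_backing(Mode.YIELD)` drops to 0.2. That visible ratio is the sense in which this is transparent fractional reserve banking.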

Considering these assets in yield mode are (again) short-tail assets, sufficiently liquid to support derivatives, the most efficient approach to balance APR/risk tradeoffs might involve creating a market for fund management. Essentially, users, upon bridging, could select a fund manager responsible for allocating their funds, ensuring the preservation of value for their credit asset on L2 while generating yield. This is equivalent to free banking: you choose the fund manager you trust the most, or the one with the risk parameters you think are best, and get their credit money on L2. For instance, you bridge ETH to an L2, choose Gauntlet as an asset manager, and receive gETH on L2, which is nothing more than ETH "issued" by Gauntlet. The difference from free banking is that you can see where the funds are allocated and benefit from better risk management.

What I'm essentially proposing is to split the risk related to assets from the technical risks associated with L2s. Picture this: presently, when you bridge onto an L2 network, you turn to platforms like L2Beat to gauge the technical risks involved in bridging your funds there. Since most L2s today offer credit assets backed 1:1 on L1, you're not exposed to any credit or counterparty risk, making your risk assessment primarily focused on technical aspects.

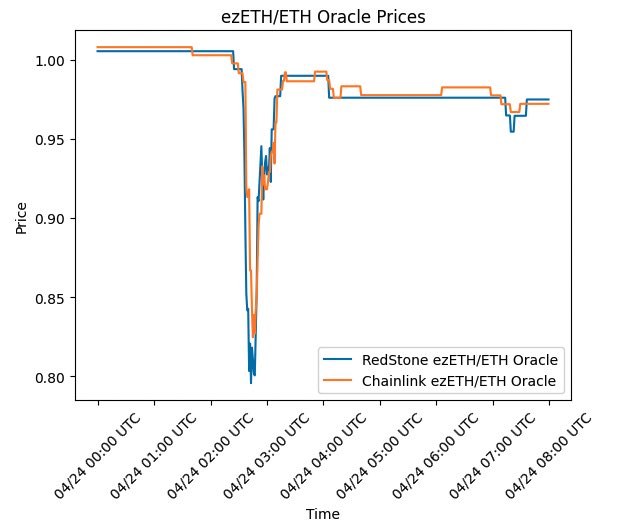

Now, consider holding ETH on Blast and ETH on Starknet. You encounter varied technical risks because they operate on different technology stacks. But beyond that, there's also diversity in credit risks. Your ETH on Blast is collateralized with stETH. And this complexity is only going to increase over time. The concept I'm proposing involves establishing a standardized interface between asset managers and L2s. This approach allows each party to specialize in their domain, enabling users to have a clearer understanding of associated risks. Here's a visual representation of it:

Establishing this fund management market on L1 introduces complexities on L2, akin to the challenges faced during the era of free banking when banks issued their own currencies, leading to complexities for market participants. Remember, our objective is to construct the most efficient market, advocating for an unopinionated approach and open markets. Hence, accommodating multiple credit assets at this level is acceptable, allowing the market to dictate the most suitable one for its use. The complexity introduced by competition between "money issuers" is what enables us to credibly create better money. I encourage you to read F. A. Hayek's paper on the denationalisation of money if you want to go further.

This design achieves the separation of asset risk from the technical risk of the architecture. Cryptography engineers and financial engineers possess distinct skill sets. Additionally, shared liquidity on L1 becomes a reality, accessible across all L2s. Providing a common interface for L2 asset holdings allows both ecosystems (asset managers and L2 builders) to progress independently, optimizing their products without being constrained by the other ecosystem, akin to how TCP/IP decoupled application builders from network providers.
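As a rough sketch of what such a common interface could look like, the Python below defines a hypothetical `AssetManager` protocol that an L2 bridge depends on, without knowing how any given manager allocates funds. All names, the basis-point allocation scheme, and the tickers are assumptions made for illustration; no existing standard is implied.

```python
from dataclasses import dataclass, field
from typing import Dict, Protocol, Tuple


class AssetManager(Protocol):
    """Hypothetical interface between asset managers and L2 bridges."""
    ticker: str  # name of the credit asset minted on L2, e.g. "gETH"

    def deposit(self, amount: int) -> None: ...
    def withdraw(self, amount: int) -> None: ...
    def allocations(self) -> Dict[str, int]: ...


@dataclass
class SimpleManager:
    """Toy manager splitting deposits by fixed weights in basis points."""
    ticker: str
    targets_bps: Dict[str, int]  # venue -> weight, expected to sum to 10_000
    held: int = 0

    def deposit(self, amount: int) -> None:
        if amount <= 0:
            raise ValueError("deposit must be positive")
        self.held += amount

    def withdraw(self, amount: int) -> None:
        if amount <= 0 or amount > self.held:
            raise ValueError("invalid withdrawal")
        self.held -= amount

    def allocations(self) -> Dict[str, int]:
        # Publicly readable breakdown of where the funds sit.
        return {v: self.held * bps // 10_000 for v, bps in self.targets_bps.items()}


@dataclass
class L2Bridge:
    """Bridge that mints one credit asset per registered manager."""
    managers: Dict[str, AssetManager] = field(default_factory=dict)
    balances: Dict[Tuple[str, str], int] = field(default_factory=dict)

    def register(self, manager: AssetManager) -> None:
        self.managers[manager.ticker] = manager

    def bridge(self, user: str, amount: int, ticker: str) -> None:
        manager = self.managers[ticker]  # KeyError if the manager is unknown
        manager.deposit(amount)
        key = (user, ticker)
        self.balances[key] = self.balances.get(key, 0) + amount
```

The bridge only ever calls `deposit`, `withdraw`, and `allocations`, so an L2 team and an asset manager could each change their internals without coordinating, which is the decoupling described above.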

Now, let's address potential challenges with this model. The primary concern is asset fragmentation, wherein assets possess varying risk profiles, making it challenging for L2 applications to manage these diversified risks. However, with the rise of risk analyzers, oracles, and blockchain transparency, this issue might be effectively addressed. Another issue is the potential for an asset manager to attain a monopolistic position, contradicting the system's intent, which relies on competition to ensure efficient management. This remains an unanswered question, one that warrants a separate discussion – your thoughts on this matter would be appreciated in the comments.

In conclusion, the crypto ecosystem finds itself at the crossroads of "this time is different". Creating a system revolutionizing our monetary interactions has been a painstaking process, and while we're not there yet, external challenges loom large. This journey isn't just about looking back; it's about charting a course toward a future where money becomes fairer, more efficient, and way less complex (starting with inflation). So, let's stride forward, keeping our eyes fixed on a world where money isn't just a thing of the past, but a bright beacon guiding us toward a better way to trade and share value. We have to remain focused on crafting a superior alternative to fiat money for society.

This can be modeled by the function P(t), with P0 the initial population, r the growth rate, and t the time.
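Assuming growth at a constant rate, the standard form that matches these symbols is the exponential one. Continuous compounding is shown here as an assumption; with discrete periods the analogous form would be $P_0 (1 + r)^t$.

$$
P(t) = P_0 \, e^{r t}
$$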

This is compounding, and it translates into exponential growth. It applies to many subjects because knowledge compounds. Now the question is: why can't we have some sort of compounding in money, or in organizations? My answer is that everything there is highly subjective and thus subject to complex consensus and politics. Compounding comes from specialization and from building on previous knowledge. Neither is possible here: consensus is slow, and nothing specializes because politics keeps shifting and changing direction.